Projects

Read about our current projects on learning through the eyes of a child, concept learning, and compositional generalization.

Learning through the eyes and ears of a child

Key people: Emin Orhan, Wai Keen Vong, Wentao Wang, Najoung Kim, and Guy Davidson

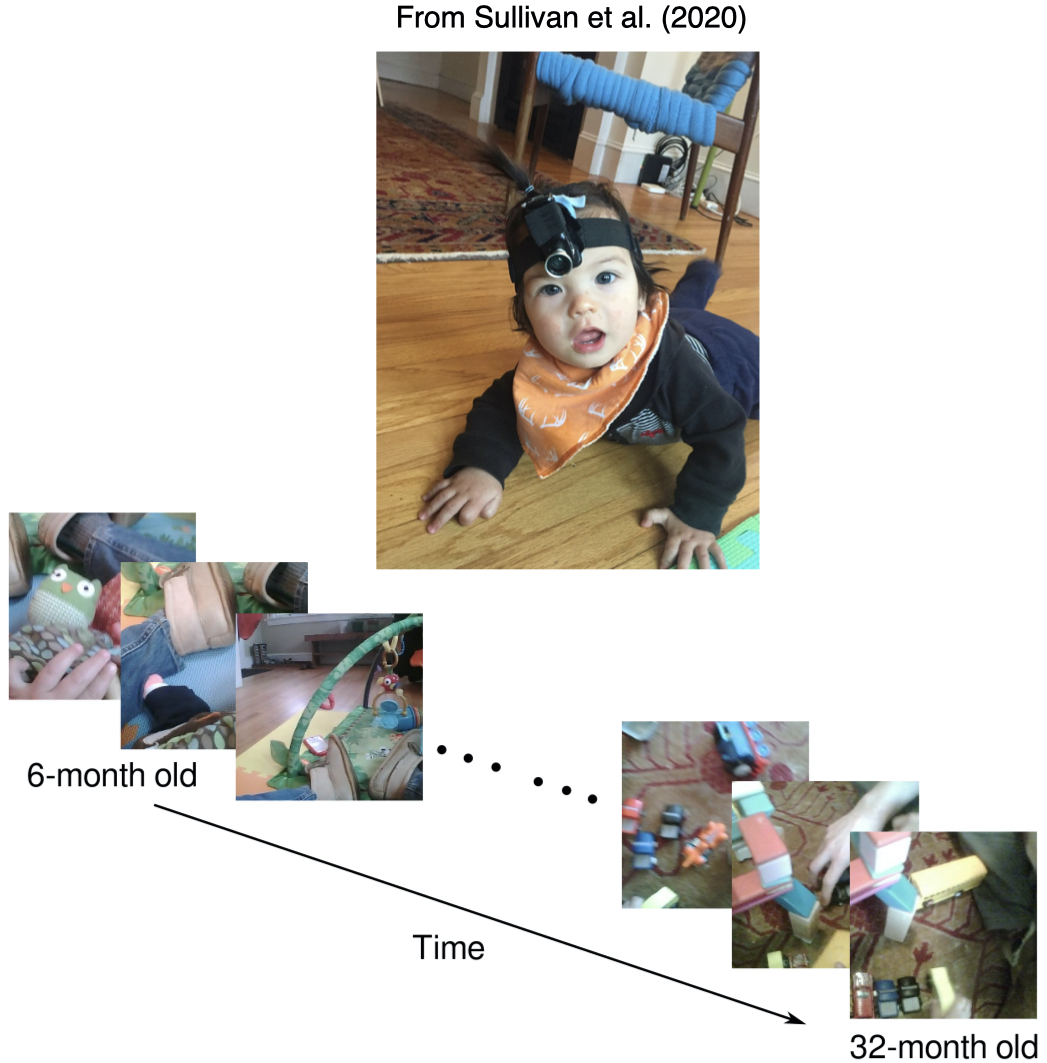

Young children have wide-ranging and sophisticated knowledge of the world. What is the origin of this early knowledge? How much can be explained through generic learning mechanisms applied to sensory data, and how much requires more substantive innate inductive biases? We examine these nature vs. nurture questions by training large-scale neural networks through the eyes and ears of a single developing child, using longitudinal baby headcam videos (see recent dataset from Sullivan et al., 2020).

Our results show that broadly useful visual features and high-level linguistic structure can emerge from self-supervised learning applied only to a slice of one child’s experiences. Our ongoing work is studying whether predictive models of the world can also be learned via similar mechanisms.

Vong, W. K., Wang, W., Orhan, A. E., and Lake, B. M. (2024). Grounded language acquisition through the eyes and ears of a single child. Science, 383, 504-511.

Orhan, A. E., and Lake, B. M. (2024). Learning high-level visual representations from a child’s perspective without strong inductive biases. Nature Machine Intelligence, 6, 271-283.

Wang, W., Vong, W. K., Kim, N., and Lake, B. M. (2023). Finding Structure in One Child’s Linguistic Experience. Cognitive Science, 47, e13305.

Neuro-symbolic modeling

Key people: Reuben Feinman

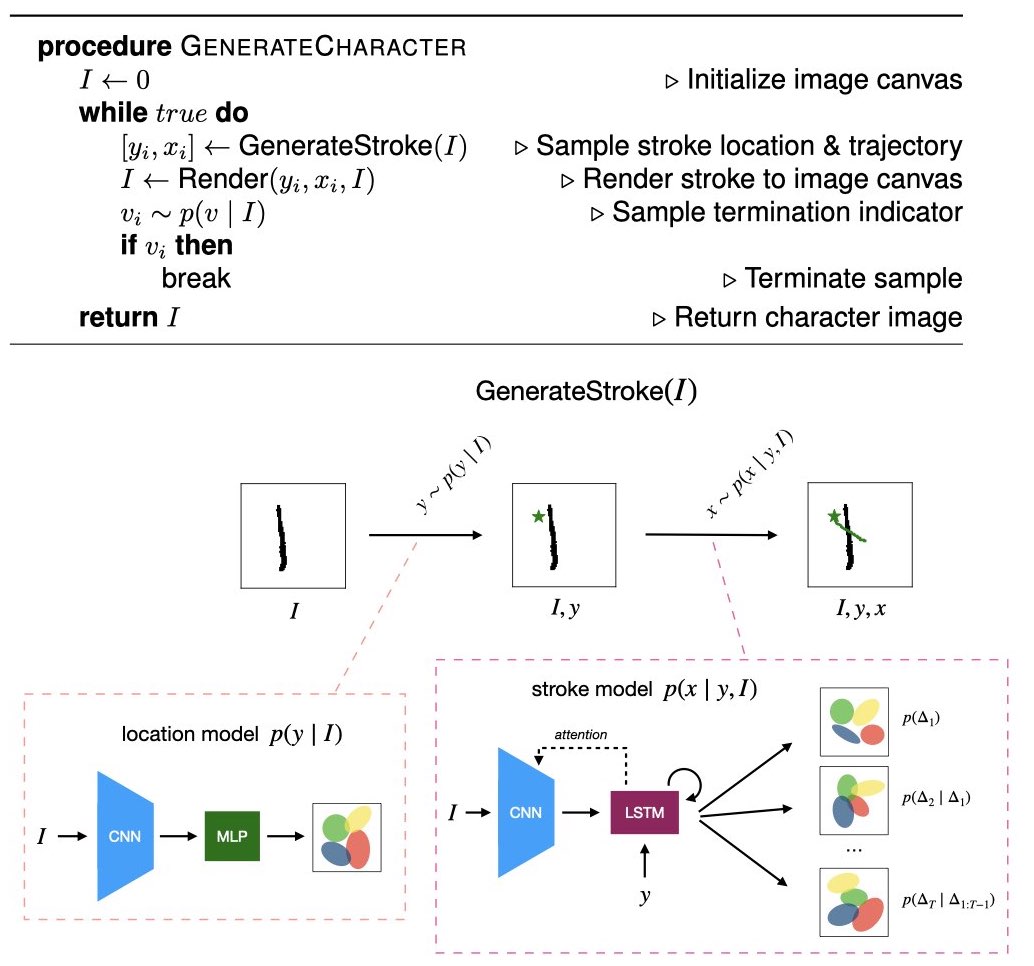

Human conceptual representations are rich in structural and statistical knowledge. Symbolic models excel at capturing compositional and causal structure, but they struggle to model the most complex correlations found in raw data. In contrast, neural network models excel at processing raw stimuli and capturing complex statistics, but they struggle to model compositional and causal knowledge. The human mind seems to transcend this dichotomy: learning structural and statistical knowledge from raw inputs.

We are developing neuro-symbolic models that learn compositional and causal generative programs from raw data, while using neural sub-routines for powerful statistical modeling (see diagram). We aim to better understand the dual structural and statistical natures of human concepts, and to learn neuro-symbolic representations for machine learning applications.

Zhou, Y., Feinman, R., and Lake, B. M. (2024). Compositional diversity in visual concept learning. Cognition, 244, 105711.

Feinman, R. and Lake, B. M. (2020). Learning Task-General Representations with Generative Neuro-Symbolic Modeling. International Conference on Learning Representations (ICLR).

Feinman, R. and Lake, B. M. (2020). Generating new concepts with hybrid neuro-symbolic models. In Proceedings of the 42nd Annual Conference of the Cognitive Science Society.

Compositional generalization in minds and machines

Key people: Yanli Zhou, Laura Ruis, Max Nye, and Marco Baroni

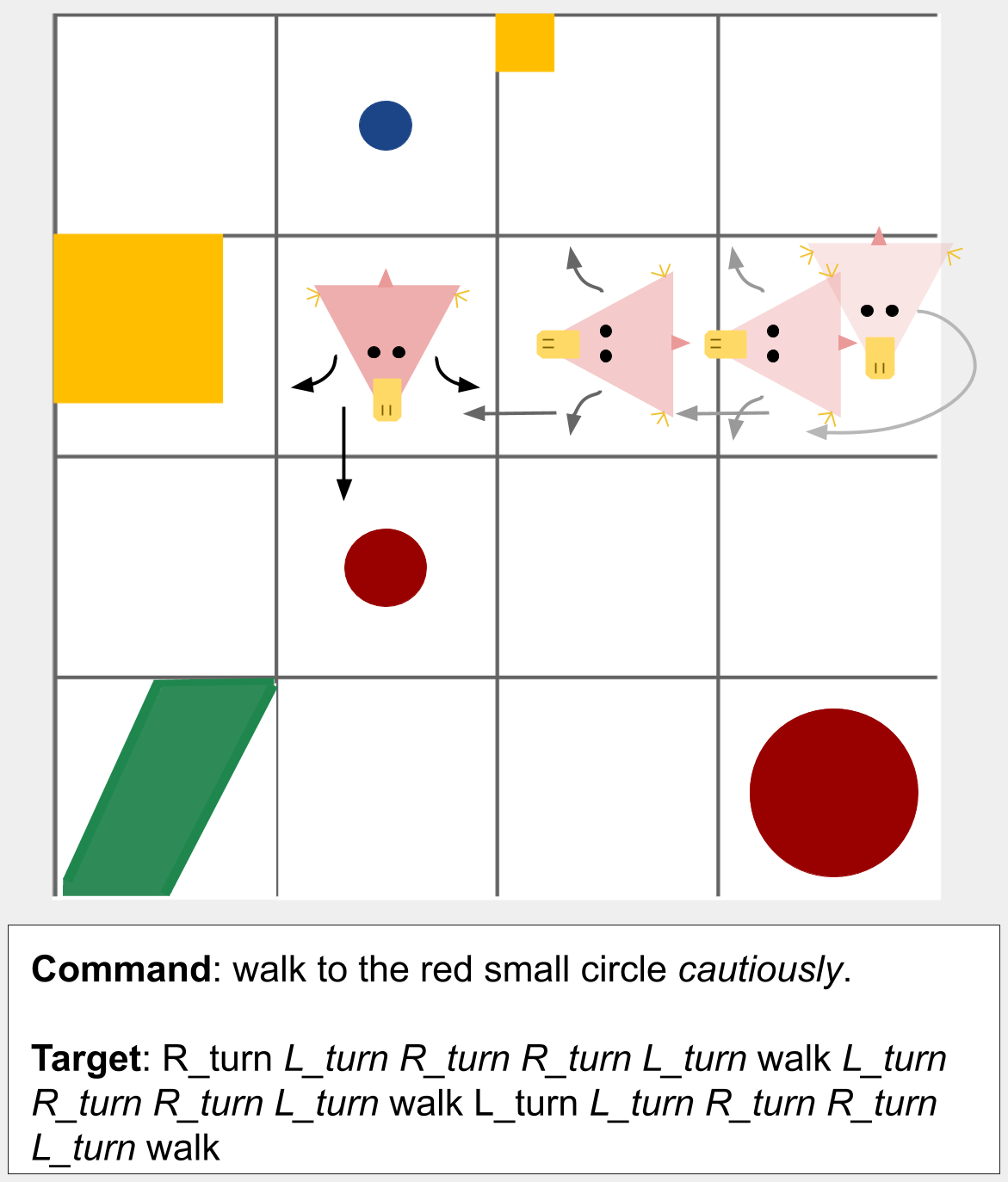

People make compositional generalizations in language, thought, and action. Once a person learns how to “photobomb,” she immediately understands how to “photobomb twice” or “photobomb vigorously.” We have shown that, despite recent advances in natural language processing, the best algorithms fail catastrophically on tests of compositionality.

To better understand these distinctively human abilities, we are studying human compositional learning of language-like instructions. Based on behavioral insights, we are developing novel meta-learning and neural-symbolic models to tackle popular compositional learning benchmarks. Additional work focuses on learning compositional visual concepts and developing more challenging benchmarks for AI, e.g., few-shot learning of concepts such as “cautiously” (see image of “walking to the small red circle cautiously,” which requires looking both ways before moving).

Lake, B. M. and Baroni, M. (2023). Human-like systematic generalization through a meta-learning neural network. Nature, 623, 115-121.

Zhou, Y., Feinman, R., and Lake, B. M. (2023). Compositional diversity in visual concept learning. Preprint available at arXiv:2305.19374.

Ruis, L., Andreas, J., Baroni, M., Bouchacourt, D., and Lake, B. M. (2020). A Benchmark for Systematic Generalization in Grounded Language Understanding. Advances in Neural Information Processing Systems 33.

Nye, M., Solar-Lezama, A., Tenenbaum, J. B., and Lake, B. M. (2020). Learning Compositional Rules via Neural Program Synthesis. Advances in Neural Information Processing Systems 33.